Weather Link - An experiment with ASP.NET Core

There are times when I struggle with focusing more on the management side than on development. I do think it’s a benefit to maintain some up-to-date technical skills to help with technical guidance and decisions. For on-the-job learning, I’ve been looking at Scala in relation to work and then expanding to Lagom. However, for personal learning, I tend to stick to the .NET stack. In the .NET world lots of things have been changing recently.

Technology

.NET Core hit 1.0 and ASP.NET Core hit 1.0, but best of all, they are open source! For Windows-based platforms (with some restrictions), Visual Studio Community is an option, but for everything else (including OS X and Linux), we now have Visual Studio Code.

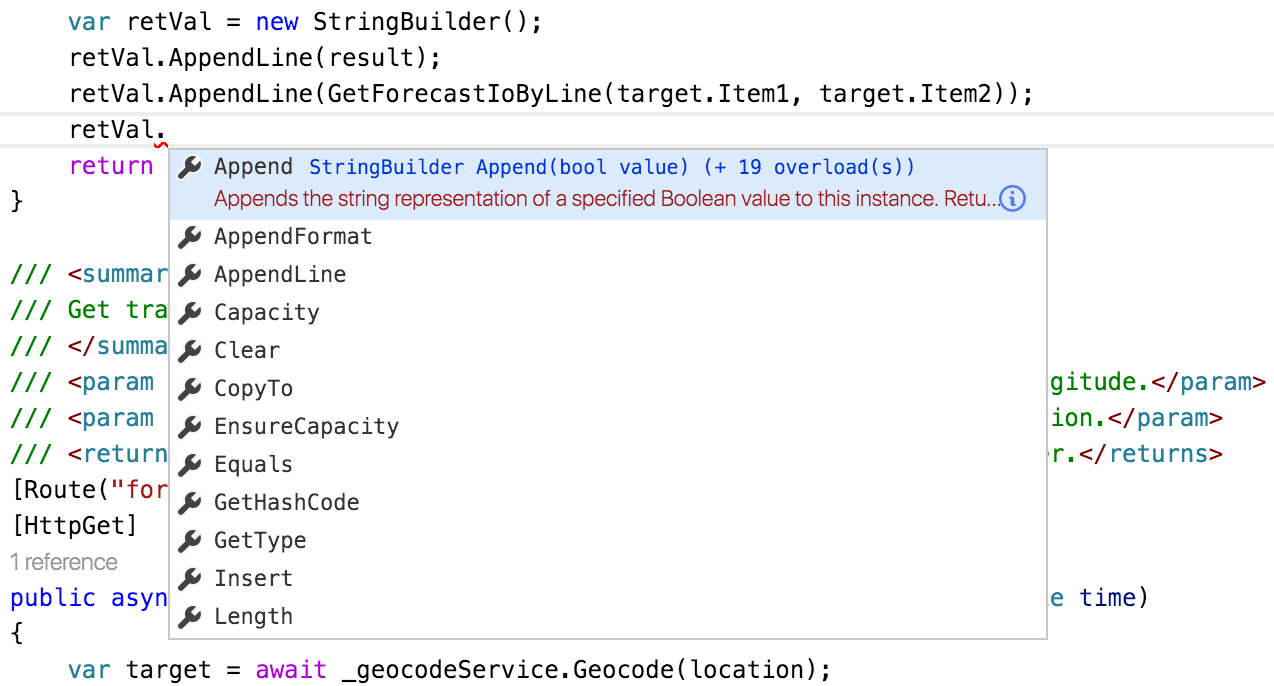

So to get started, I went with Visual Studio Code so I could see how the new tool worked. Overall, I really liked it and am writing this post in it now as well. I went with the Insiders build to get an in-editor terminal and tabs, but those should land in the standard version soon if they haven’t already. It provides the familiar IntelliSense from Visual Studio and much of the same functionality .NET developers are used to.

Setup

I’m going to highlight some of the parts I found interesting, but the whole source is available on GitHub. The README is a bit lacking and I apologize for that.

Program

Those of you familiar with running ASP.NET in IIS will be somewhat surprised by Program.cs:

    public static void Main(string[] args)
    {
        var config = new ConfigurationBuilder()
            .AddCommandLine(args)
            .AddEnvironmentVariables()
            .Build();

        var host = new WebHostBuilder()
            .UseConfiguration(config)
            .UseKestrel()
            .UseContentRoot(Directory.GetCurrentDirectory())
            .UseIISIntegration()
            .UseStartup<Startup>()
            .Build();

        host.Run();
    }

I have UseKestrel() as well as UseIISIntegration(). This is due to running the services on Azure.

Even though I was using the self-hosted Kestrel server, IIS Integration has to be enabled for Azure to serve the content.

You can also see Startup referenced here and that’s where more of the new fun shows up.

Startup

The Startup class may look like a bit of magic at first, and it relies heavily on the Builder pattern.

Configuration

In Startup there are a few interesting parts. First, the configuration:

    public Startup(IHostingEnvironment env)
    {
        var builder = new ConfigurationBuilder()
            .SetBasePath(env.ContentRootPath)
            .AddJsonFile("appsettings.json", optional: true, reloadOnChange: true)
            .AddJsonFile($"appsettings.{env.EnvironmentName}.json", optional: true)
            .AddEnvironmentVariables();

        Configuration = builder.Build();
    }

I left the env.EnvironmentName overrides in, but I don’t personally use them currently. What I really like is that right out of the box, I can load a settings file, appsettings.json, and then use environment variables to override the values. This makes keeping secrets out of git trivial, which is a huge plus for open source work. See Working with Multiple Environments for more details. To set up the overrides, on my local machine and Azure instance I have (with actual values added):

    export DarkSkyApiKey=TOPSECRET
    export GoogleMapsApiKey=TOPSECRET
    export SlackTokens__0=TOPSECRET
    export SlackTokens__1=TOPSECRET

This shows you a top level element and how arrays work. If you needed to have sub-elements, you would use the same __ syntax to show the hierarchy.
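
To make the override behaviour concrete, here is a minimal sketch. It assumes the Microsoft.Extensions.Configuration and Microsoft.Extensions.Configuration.EnvironmentVariables packages are referenced. The in-memory dictionary is only a stand-in for appsettings.json, and every value shown is a made-up placeholder rather than anything from the real project.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using Microsoft.Extensions.Configuration;

public static class ConfigOverrideSketch
{
    public static IConfigurationRoot Build()
    {
        // Stand-in for appsettings.json (placeholder values, not the real file).
        var defaults = new Dictionary<string, string>
        {
            ["DarkSkyApiKey"] = "from-file",
            ["SlackTokens:0"] = "from-file"
        };

        // Simulates the `export` lines above, for the current process only.
        Environment.SetEnvironmentVariable("DarkSkyApiKey", "from-env");
        Environment.SetEnvironmentVariable("SlackTokens__0", "from-env-0");
        Environment.SetEnvironmentVariable("SlackTokens__1", "from-env-1");

        // Sources added later override sources added earlier.
        return new ConfigurationBuilder()
            .AddInMemoryCollection(defaults)
            .AddEnvironmentVariables()
            .Build();
    }

    // Reads the array elements back in index order.
    public static string[] SlackTokens(IConfiguration config) =>
        config.GetSection("SlackTokens").GetChildren().Select(c => c.Value).ToArray();
}
```

After `Build()`, `config["DarkSkyApiKey"]` holds the environment value rather than the file value, and the `__` in `SlackTokens__0` is read back as the `:` separator, so the same entry is reachable as `config["SlackTokens:0"]`.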

Services

The next section shows how to configure the Dependency Injection.

    // Add custom services
    services.Configure<WeatherLinkSettings>(Configuration);
    services.AddTransient<IForecastService, DarkSkyForecastService>();
    services.AddTransient<ITrafficAdviceService, WeatherBasedTrafficAdviceService>();
    services.AddTransient<IGeocodeService, GoogleMapsGeocodeService>();

Here the values loaded in Configuration are bound to the WeatherLinkSettings object that represents the data. Following that is the real fun: it registers transient dependencies for each of the interfaces I created. I also configure Swagger via Swashbuckle (specifically the Ahoy project, which ports it to ASP.NET Core):

    // Configure swagger
    services.AddSwaggerGen();
    services.ConfigureSwaggerGen(options =>
    {
        options.SingleApiVersion(new Info
        {
            Version = "v1",
            Title = "Weather Link",
            Description = "An API to get weather based advice.",
            TermsOfService = "None"
        });
        options.IncludeXmlComments(GetXmlCommentsPath());
        options.DescribeAllEnumsAsStrings();
    });
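
Going back to the AddTransient registrations for a moment: a transient service is created fresh every time it is requested. The sketch below illustrates that, assuming the Microsoft.Extensions.DependencyInjection package is referenced. The interface and class here are empty, hypothetical stand-ins, not the real WeatherLink types.

```csharp
using System;
using Microsoft.Extensions.DependencyInjection;

// Hypothetical stand-ins for the real WeatherLink interface and implementation.
public interface IForecastService { }
public class StubForecastService : IForecastService { }

public static class TransientSketch
{
    public static bool ResolvesNewInstanceEachTime()
    {
        var services = new ServiceCollection();
        services.AddTransient<IForecastService, StubForecastService>();

        var provider = services.BuildServiceProvider();

        // Two requests for the same interface...
        var first = provider.GetRequiredService<IForecastService>();
        var second = provider.GetRequiredService<IForecastService>();

        // ...yield two distinct objects under the transient lifetime.
        return !ReferenceEquals(first, second);
    }
}
```

Swapping AddTransient for AddSingleton in this sketch would make both requests return the same object, which is the main thing to weigh when a service holds state or is expensive to construct.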

Runtime

Finally, we get to the Configure method which sets up the actual HTTP request pipeline.

    public void Configure(IApplicationBuilder app, IHostingEnvironment env, ILoggerFactory loggerFactory)
    {
        loggerFactory.AddConsole(Configuration.GetSection("Logging"));
        loggerFactory.AddDebug();

        app.UseStaticFiles();
        app.UseMvc();
        app.UseSwagger();
        app.UseSwaggerUi();
    }

This sets up logging to the console, serving static files, the default MVC handling, and finally Swagger.

Organization

Besides the top-level files already discussed there are a few others: project.json, Dockerfile, etc. More interesting is the src directory, which holds the rest of the program’s source code.

Models

Models are very familiar if you did ASP.NET MVC before. They are POCOs to represent your data.

ExtensionMethods

Another friend from previous versions, Extension Methods are often helpful to have. I had to shamelessly copy one from MoreLINQ as it does not support .NET Core yet.

Controllers

Again, if you are familiar with ASP.NET MVC this will not be a surprise. Controllers define a set of actions the application can perform. The new element here is the baked-in dependency injection. If you’re a bit behind the times in C#, async and await are used here, as well as routing attributes that carried over from the WebAPI days.

    [Route("{latitude}/{longitude}")]
    [HttpGet]
    public async Task<string> GetTrafficAdvice(double latitude, double longitude)

Overall, the controller is fairly boring (as it should be).
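
As a rough illustration of why the controller stays boring, here is a sketch of constructor injection combined with an async action. The interface shape, the stub, and the class name are all assumptions made for this example. The routing attributes and the MVC base class are left out so it compiles without ASP.NET Core.

```csharp
using System.Threading.Tasks;

// Hypothetical shape of the service interface; the real one may differ.
public interface ITrafficAdviceService
{
    Task<string> GetTrafficAdvice(double latitude, double longitude);
}

// Stub implementation used only for this sketch.
public class FixedAdviceService : ITrafficAdviceService
{
    public Task<string> GetTrafficAdvice(double latitude, double longitude) =>
        Task.FromResult("Leave now");
}

// Plain class standing in for an MVC controller.
public class TrafficAdviceControllerSketch
{
    private readonly ITrafficAdviceService _adviceService;

    // The framework supplies this argument from the registered services.
    public TrafficAdviceControllerSketch(ITrafficAdviceService adviceService)
    {
        _adviceService = adviceService;
    }

    // The action only delegates; all real work lives in the service.
    public async Task<string> GetTrafficAdvice(double latitude, double longitude)
    {
        return await _adviceService.GetTrafficAdvice(latitude, longitude);
    }
}
```

Because the controller depends only on the interface, a test can hand it a stub like FixedAdviceService without touching any external API.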

Services

Now we get to the really fun part, the services. Following standard naming conventions the classes starting with I are interface declarations.

Geocoding

GoogleMapsGeocodeService uses the Google Maps API to convert a string into a latitude and longitude. It does the processing with the ever-popular Json.NET. I was lazy and just returned a Tuple instead of creating a geolocation model class. I also used the JObject directly, combined with null-conditional operators, to follow the Robustness principle and be liberal in what is received. If the expected elements are present, it returns a value regardless of whatever else is returned. I barely use any of the data, so I skipped creating an object for it.

    var location = responseJObject?["results"]?.First()?["geometry"]?["location"];
    var recievedLatitude = location?["lat"];
    var recievedLongitude = location?["lng"];
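
A self-contained variation on that idea might look like the sketch below, assuming the Newtonsoft.Json package is referenced. The class and method names are invented for this example. It also swaps in FirstOrDefault() as a deliberate change, because First() throws when the "results" array is empty.

```csharp
using System;
using System.Linq;
using Newtonsoft.Json.Linq;

public static class GeocodeParseSketch
{
    // For well-formed JSON, returns null when the expected elements are missing.
    public static Tuple<double, double> ParseLocation(string json)
    {
        var response = JObject.Parse(json);

        // Each ?. or ?[ ] step short-circuits to null if the previous step found nothing.
        var location = response["results"]?.FirstOrDefault()?["geometry"]?["location"];

        // Json.NET's explicit conversion to double? passes a missing token through as null.
        var latitude = (double?)location?["lat"];
        var longitude = (double?)location?["lng"];

        return latitude.HasValue && longitude.HasValue
            ? Tuple.Create(latitude.Value, longitude.Value)
            : null;
    }
}
```

Any extra fields in the response are simply ignored, which is the "liberal in what is received" half of the principle.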

Forecasts

I love Forecast.io and their hyper-local API is what powers WeatherLink’s forecast service. Again, the actual API call is made through HttpClient and parsed with Json.NET.

I do forcibly set the numeric format to be N4 on the URL parameters to avoid issues, but it seemed like the DarkSky API handled it without that.
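
For reference, a minimal sketch of that formatting step is below. The helper name is made up, and passing CultureInfo.InvariantCulture is an assumption on my part. It guards against machines whose culture uses a comma as the decimal separator.

```csharp
using System.Globalization;

public static class CoordinateFormatSketch
{
    // Builds the "latitude,longitude" part of a request URL.
    public static string ToUrlSegment(double latitude, double longitude)
    {
        // "N4" fixes the output at four decimal places;
        // InvariantCulture keeps "." as the decimal separator on every machine.
        var lat = latitude.ToString("N4", CultureInfo.InvariantCulture);
        var lng = longitude.ToString("N4", CultureInfo.InvariantCulture);
        return lat + "," + lng;
    }
}
```

For example, `ToUrlSegment(42.886447, -78.878369)` gives `42.8864,-78.8784`. One issue a fixed format plausibly avoids is exponent notation: a very small value such as 0.00001 prints as `1E-05` with the default ToString(), but as `0.0000` with N4.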

For this web service, I do deserialize directly to an object for parts of the response, but not the entire thing for efficiency reasons. After selective deserializing, I combine the results into a Forecast object.
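
Selective deserialization with Json.NET can be sketched roughly as follows, assuming the Newtonsoft.Json package is referenced. The DataPoint model and the choice of the "hourly.data" array are assumptions for illustration. The real service may pick different parts of the response and different fields.

```csharp
using System.Collections.Generic;
using Newtonsoft.Json.Linq;

// Hypothetical model holding only the fields this sketch needs.
public class DataPoint
{
    public long time { get; set; }
    public double precipProbability { get; set; }
}

public static class SelectiveDeserializeSketch
{
    public static List<DataPoint> ReadHourly(string json)
    {
        // The whole payload is parsed into tokens once...
        var root = JObject.Parse(json);

        // ...but only the "hourly.data" array is materialized into typed objects.
        var hourly = root["hourly"]?["data"];
        return hourly?.ToObject<List<DataPoint>>() ?? new List<DataPoint>();
    }
}
```

Properties in the JSON that the model does not declare are skipped during conversion, so the model can stay as small as the code that consumes it.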

Traffic advice

This is the meat and potatoes of the service. It’s also where you will find wonderful comments like:

    //TODO: I hate this, fix it

I’m a big proponent of making it work first and making it better later. The methods are a bit long for my taste right now, but they are basically string builders that operate on the results from the forecast service. I think most of the code is fairly readable, but I did leave in a few magic numbers, like taking the 5 forecast times closest to your target departure time for comparison:

    var range = forecasts.Skip(afterTarget - 2)
        .Take(5)
        .Where(x => Math.Abs((DateTimeOffset.FromUnixTimeSeconds(x.time) - targetTime).Hours) <= 1)
        .ToList();

or a commute time of 20 minutes (I love Buffalo):

    var bestTimeToLeaveWork = forecasts.Any() ? forecasts.MinimumPrecipitation(20)?.FirstOrDefault() : null;
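
One possible cleanup for the first of those magic numbers is to give them names. The sketch below works on plain Unix timestamps so it stands alone. The constant names and the way afterTarget is computed are assumptions. It also uses TotalHours, which is a guess at the intent: the Hours property in the snippet above returns only the whole-hours component of the difference, so a point 80 minutes away still counts as 1.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class ForecastWindowSketch
{
    private const int PointsBeforeTarget = 2;
    private const int WindowSize = 5;
    private const double MaxHoursFromTarget = 1.0;

    // unixTimes is assumed to be sorted in ascending order.
    public static List<long> Around(IReadOnlyList<long> unixTimes, DateTimeOffset targetTime)
    {
        // Index of the first point after the target (equals the list length if there is none).
        var afterTarget = unixTimes.Count(t => DateTimeOffset.FromUnixTimeSeconds(t) <= targetTime);

        return unixTimes
            .Skip(Math.Max(0, afterTarget - PointsBeforeTarget))
            .Take(WindowSize)
            .Where(t => Math.Abs((DateTimeOffset.FromUnixTimeSeconds(t) - targetTime).TotalHours) <= MaxHoursFromTarget)
            .ToList();
    }
}
```

With points every 30 minutes and a target 10 minutes after the fifth point, this keeps the four points within an hour of the target and drops the one that is 80 minutes away.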

Response times

This is fairly limited profiling, but I generally see response times of 500 ms to 800 ms for the geocoding endpoint as well as the direct latitude and longitude endpoint. Since both take about the same time, it seems like the geocoding API request adds a negligible delay and the majority of that time is spent in my service. I know my current math is a bit cumbersome, so I might be able to reduce that a bit more.
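
To narrow down where the time goes, each step could be wrapped in a small timing helper. This is only a sketch using Stopwatch from the base class library. The helper name is made up, and it is not part of the project.

```csharp
using System;
using System.Diagnostics;
using System.Threading.Tasks;

public static class TimingSketch
{
    // Runs an async step and returns its result together with the elapsed time.
    public static async Task<Tuple<T, TimeSpan>> TimeAsync<T>(Func<Task<T>> step)
    {
        var stopwatch = Stopwatch.StartNew();
        var result = await step();
        stopwatch.Stop();
        return Tuple.Create(result, stopwatch.Elapsed);
    }
}
```

Wrapping the geocoding call, the forecast call, and the advice calculation separately would show which of the three dominates, instead of inferring it from the total.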

Summary

Overall, I like the new features in ASP.NET Core, and with this basic example I didn’t hit any of the current restrictions. However, I think the best part is that it now runs anywhere (mostly) and can be developed with entirely free tools. This is a great step forward and I can’t wait to see what else Microsoft and the community are going to do with the platform.